My Experience Building a GitLab CI/CD Pipeline to Deploy via FTP

Continuous integration and deployment are not just fancy words: efficient, robust pipelines can save a lot of time when developing software and, perhaps more importantly, make software development more pleasant. Recently I had to deploy a small REST API written in PHP, so I quickly needed a deployment strategy built on GitLab's CI/CD features. Here's a first-person account of how I improved our deployment workflow, focusing on a pipeline designed to deploy PHP applications via FTP.

Setting the Stage

We had a small REST API written in PHP, and automating its deployment required only minimal changes. We wanted to streamline the process so updates could be deployed quickly and without manual intervention. I set up a GitLab CI/CD pipeline so that every change pushed to master is deployed to production automatically, which saves time, reduces errors, and makes our deployment process more reliable.

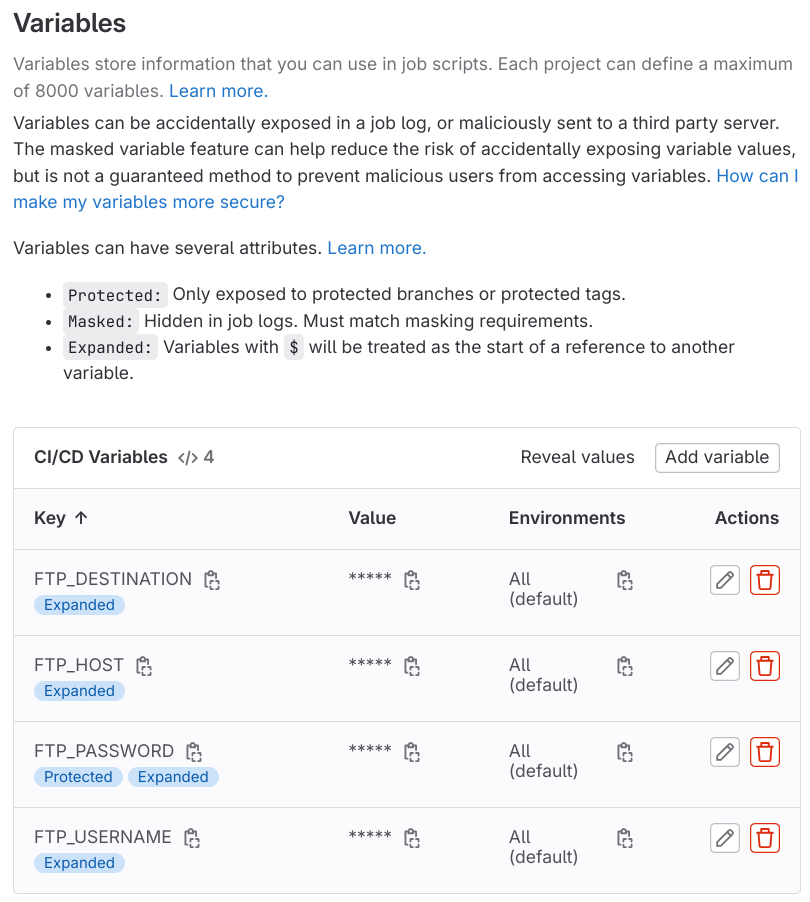

I started by defining the following variables in the GitLab web interface, under the project's Settings > CI/CD > Variables: FTP_USERNAME, FTP_PASSWORD, FTP_HOST and FTP_DESTINATION. This stores the credentials securely, keeps them out of the repository, and makes it easy to change the deployment destination by editing the variables alone.
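Since the job depends on these four variables, it can help to fail fast with a clear message when one of them is missing or empty, for example after a variable was renamed or deleted. The following is a minimal bash sketch, assuming the variable names used in the pipeline; the helper name `require_vars` is hypothetical and not part of GitLab.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fail fast when a required CI/CD variable is missing or empty.
# Usage: require_vars NAME [NAME...]
require_vars() {
  local missing=()
  local name
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    # The ":-" default keeps this safe under "set -u" when the variable is unset.
    if [ -z "${!name:-}" ]; then
      missing+=("$name")
    fi
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "Missing CI/CD variables: ${missing[*]}" >&2
    return 1
  fi
  return 0
}

# Example call with the variable names used by the deploy job
require_vars FTP_USERNAME FTP_PASSWORD FTP_HOST FTP_DESTINATION || echo "deployment would stop here"
```

In the job itself, the function could be defined in `before_script` (which runs in the same shell as `script`) and called with `|| exit 1` before lftp is installed, so a misconfigured project fails in seconds instead of at the FTP login.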

The Pipeline Breakdown

Here's a look at the specific GitLab CI/CD configuration we employed for the deployment:

```yaml
deploy:
  script:
    - echo $FTP_USERNAME $FTP_HOST $FTP_DESTINATION
    - apt-get update -qq && apt-get install -y -qq lftp
    - |
      lftp -c "set xfer:log true; \
      set xfer:log-file ftp_detailed_transfer.log; \
      set ftp:ssl-allow no; \
      open -u $FTP_USERNAME,$FTP_PASSWORD $FTP_HOST; \
      mirror -v ./php/ $FTP_DESTINATION --reverse --delete --ignore-time --parallel=10 \
      --exclude-glob .git/ --exclude-glob .git/* --exclude-glob .gitignore \
      --exclude-glob .gitlab-ci.yml \
      --exclude-glob .ftpquota \
      --exclude-glob ftp_transfer.log --exclude-glob ftp_error.log --exclude-glob ftp_detailed_transfer.log" \
      > ftp_transfer.log 2> ftp_error.log || LFTP_EXIT_CODE=$?
    - cat ftp_transfer.log
    - cat ftp_error.log
    - if [ -f ftp_detailed_transfer.log ]; then cat ftp_detailed_transfer.log; else echo "Detailed transfer log not created. Probably no files needed to transfer."; fi
    - if [ "$LFTP_EXIT_CODE" != "" ]; then exit $LFTP_EXIT_CODE; fi
  # environment:
  #   name: production
  artifacts:
    when: always  # keep the logs even when the job fails (the default is on_success)
    paths:
      - ftp_transfer.log
      - ftp_error.log
      - ftp_detailed_transfer.log
  only:
    - master
```
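The lftp command in this job is a single double-quoted string split across several lines with `\`. To see what the shell actually hands to `lftp -c`, the same construct can be reproduced with a plain variable. This is a minimal bash sketch with placeholder values (the remote path `/remote/dir` is made up): inside double quotes, a backslash at the end of a line is removed together with the newline, so the pieces join into one command string.

```bash
#!/usr/bin/env bash
# Inside double quotes, "\" followed by a newline is removed, so the three
# lines below become ONE string, like the lftp -c argument in the job.
# The remote path /remote/dir is a placeholder.
cmd="set xfer:log true; \
set ftp:ssl-allow no; \
mirror --reverse ./php/ /remote/dir"

# Print exactly what lftp -c would receive
printf '%s\n' "$cmd"
```

Without the trailing backslashes, the YAML `|` block would keep the line breaks, and the string passed to lftp would contain newlines instead of a single line of `;`-separated commands.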

Deployment Strategy Explained

- Installing Dependencies: We refresh the package index and install lftp, a command-line file transfer program that supports FTP, FTPS, and other protocols. This tool handles all of our file transfers. Because the job uses apt-get, it needs to run in a Debian- or Ubuntu-based image.

- Executing the FTP Transfer: Using lftp, we run a mirror command that syncs the local ./php/ directory to the remote FTP destination. We include several options: --reverse to upload (local to remote), --delete to remove remote files that no longer exist locally, --ignore-time to ignore modification times when deciding whether a file needs to be transferred, and --parallel=10 to speed up the process by running up to 10 transfers simultaneously. We also exclude Git-related files and the log files from the transfer to keep the remote site clean.

- We use `lftp` rather than a basic FTP client because it can mirror whole directory trees and delete files on the destination that no longer exist on the source.

- We use the YAML block scalar `|` (pipe character) to start a multi-line string, and line continuation with `\` to split the lftp command over several lines for readability.

- We set `xfer:log` and `xfer:log-file` to produce a detailed transfer log, which we print in the console.

- `mirror -v ./php/ $FTP_DESTINATION --reverse` mirrors from local to remote (without `--reverse`, lftp mirrors from remote to local).

- We use `--exclude-glob` to exclude a number of files from the sync.

- `> ftp_transfer.log 2> ftp_error.log` redirects the console output to ftp_transfer.log and the errors to ftp_error.log.

- `|| LFTP_EXIT_CODE=$?` captures the exit status: the special variable `$?` holds the exit code of the last executed command in Unix-like shells, which here is `lftp`. GitLab stops the script at the first command that fails, but we want to display and record the result first. Because the failure is handled by `||`, the script keeps going; after printing everything, we check the saved exit code and, if lftp failed, exit the script with that same code.

- Logging the Process: We redirect the standard output to ftp_transfer.log and the errors to ftp_error.log, and we write in-depth transfer details to ftp_detailed_transfer.log. The detailed log is created only if files were transferred or deleted, which is why we check that it exists before printing its content to the console: running `cat` on a missing file fails, and that failure would stop the job.

- Artifact Handling: We list the three log files as artifacts, so GitLab saves them for later viewing, which helps with debugging.
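The capture-then-fail pattern described above can be tried locally without an FTP server. This is a minimal bash sketch, not the pipeline itself: `fake_transfer` and `deploy_step` are hypothetical names, and `fake_transfer` simply writes to stdout and stderr and then fails with exit code 3 in place of `lftp`.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for the lftp call: some output, an error, then exit code 3.
fake_transfer() {
  echo "Transferring files..."
  echo "Connection reset" >&2
  return 3
}

# Runs in a subshell ( ... ) so that `cd` and `exit` do not affect the caller.
deploy_step() (
  cd "$(mktemp -d)" || exit 1    # scratch directory, so no stale log files exist
  TRANSFER_EXIT_CODE=""
  # In a GitLab job a failing command ends the script at once; the `|| ...`
  # part records the exit code instead, so the script keeps going.
  fake_transfer > transfer.log 2> error.log || TRANSFER_EXIT_CODE=$?
  cat transfer.log
  cat error.log
  # The detailed log is optional, so check that it exists before printing it
  if [ -f detailed_transfer.log ]; then
    cat detailed_transfer.log
  else
    echo "Detailed transfer log not created."
  fi
  # Only now propagate the original failure
  if [ -n "$TRANSFER_EXIT_CODE" ]; then exit "$TRANSFER_EXIT_CODE"; fi
)

deploy_step || echo "deploy_step failed with exit code $?"
```

Calling `deploy_step` prints the transfer output, the error output and the fallback message, and only then returns 3, which mirrors how the job shows all logs before failing.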

The Outcome

This pipeline significantly improved our deployment process, making it faster and less error-prone. Automating the FTP upload removed most of the room for human error, and lftp made the transfers more robust against connectivity issues. Most importantly, running the pipeline only on the master branch kept our production environment in sync with our most stable codebase.

Lessons Learned

Automating repetitive tasks like deployments not only saves time but also reduces the chance of errors and makes day-to-day development less tedious. In addition, detailed logs provide visibility, which is key to maintaining operational stability.

It is also important to handle sensitive information securely in CI/CD pipelines, and storing FTP credentials in CI/CD variables is the norm. It keeps those details out of the source code, which is a big step toward staying safe.
